How Snowflake plans to make Data Cloud a de facto standard

When Frank Slootman took ServiceNow Inc. public, many people undervalued the company, positioning it as just a better help desk tool. It turns out the firm actually had a massive total available market expansion opportunity in information technology service management, human resources, logistics, security, marketing and customer service management.

ServiceNow’s stock price followed the stellar execution under Chief Executive Slootman and Chief Financial Officer Mike Scarpelli. When they took the reins at Snowflake Inc., expectations were already set that they’d repeat the feat, but this time, if anything, the company was overvalued out of the gate.

It can be argued that most people didn’t really understand the market opportunity any better this time around — other than that it was a bet on Slootman’s track record of execution… and data. Good bets; but folks really didn’t appreciate that Snowflake wasn’t just a better data warehouse, that it was building what the company calls a Data Cloud… and what we’ve termed a data supercloud.

In this Breaking Analysis and ahead of Snowflake Summit, we’ll do four things: 1) Review the recent narrative and concerns about Snowflake and its value; 2) Share survey data from Enterprise Technology Research that will confirm almost precisely what the company’s CFO has been telling anyone who will listen; 3) Share our view of what Snowflake is building, namely its attempt to become the de facto standard data platform; and 4) Convey our expectations for the Snowflake Summit next week at Caesars Palace in Las Vegas.

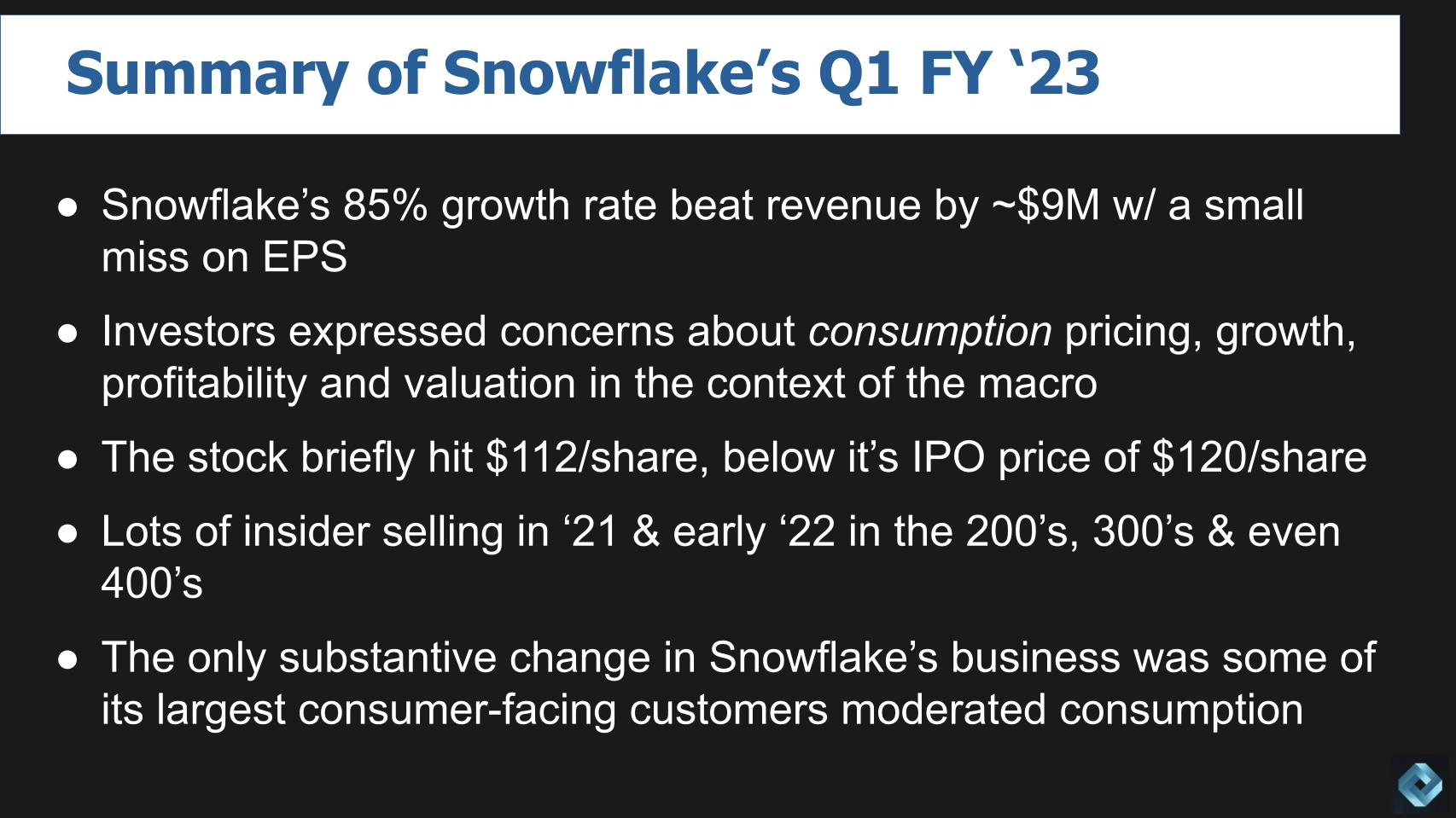

Investors expect Snowflake to beat, not meet

Snowflake’s most recent quarterly results have been well-covered. The company basically hit its targets, which for Snowflake investors was bad news. Wall Street piled on, expressing concerns about Snowflake’s consumption pricing model, slowing growth rates, lack of profitability and valuation given the current macro market conditions.

After earnings, the stock dropped below its initial public offering price, which you couldn’t touch on day one, by the way, as the stock opened well above that price. Investors also expressed concerns about some pretty massive insider selling throughout 2021 and early 2022. The stock is down around 63% year to date.

But the only real substantive change in the company’s business is that some of its largest consumer-facing customers, while still growing, dialed back their consumption this past quarter. The tone of the call was not contentious per se. But Scarpelli seemed to be getting somewhat annoyed with the implication from some analysts’ questions that something is fundamentally wrong with Snowflake’s business.

Unpacking the dynamics of Snowflake’s business

First, let’s talk about consumption pricing. On the earnings call, one of the analysts asked if Snowflake would consider more of a subscription-based model so that it could better weather such fluctuations in demand. Before the analyst could finish the question, Scarpelli emphatically said “NO!” The analyst might as well have asked, “Hey Mike, have you ever considered changing your pricing model and screwing your customers the same way most legacy SaaS companies lock their customers in… so you could squeeze more revenue out of them?”

Q. Mike, have you ever considered changing your pricing model [to subscription] and screwing your customers the same way most legacy SaaS companies lock their customers in?

A. NO!

Consumption pricing is one of the things that makes a company like Snowflake so attractive — because customers, especially large customers facing fluctuating demand, can dial down usage for certain workloads that are not yet revenue-producing.

Now let’s jump to insider selling. There was a lot of it last year and into 2022. Slootman, Scarpelli, Christian Kleinerman, Mike Speiser and several board members sold stock worth a lot of money, at prices in the 200s, 300s and even 400s. Remember, the company at one point was valued at $100 billion, surpassing the value of ServiceNow… which is just not right at this point in Snowflake’s journey. The insiders’ cost basis was very often in the single digits. So on the one hand, one can’t blame them: what a gift. And as iconic investor Peter Lynch famously said, “Insiders sell for many reasons but they buy for only one.”

But there wasn’t a lot of insider buying of the stock when it was in the 300s and above. And this pattern is something to watch. Are insiders buying now? We’ll keep watching. Snowflake is pretty generous with stock-based compensation and insiders still own plenty of shares, so maybe not, but we’ll see in future disclosures.

Regardless, the bottom line is Snowflake’s business hasn’t dramatically changed with the exception of these large, consumer-facing companies.

Which consumer-facing customers are slowing down consumption?

Another analyst pointed out that companies such as Snap Inc., Peloton Interactive Inc., Netflix Inc. and Meta Platforms Inc. have been cutting back and Scarpelli said, in what was a bit of a surprise: “Well, I’m not going to name the customers, but it’s not the ones you mentioned.” We thought a good followup would have been: “How about Walmart, Target, Visa, Amex, Expedia, Priceline or Uber… any of those, Mike?”

We doubt he would have answered – #LOL!

Update to Snowflake’s 2029 model assumptions

One thing Scarpelli did do is update Snowflake’s fiscal 2029 outlook, to emphasize the long-term opportunity the company sees.

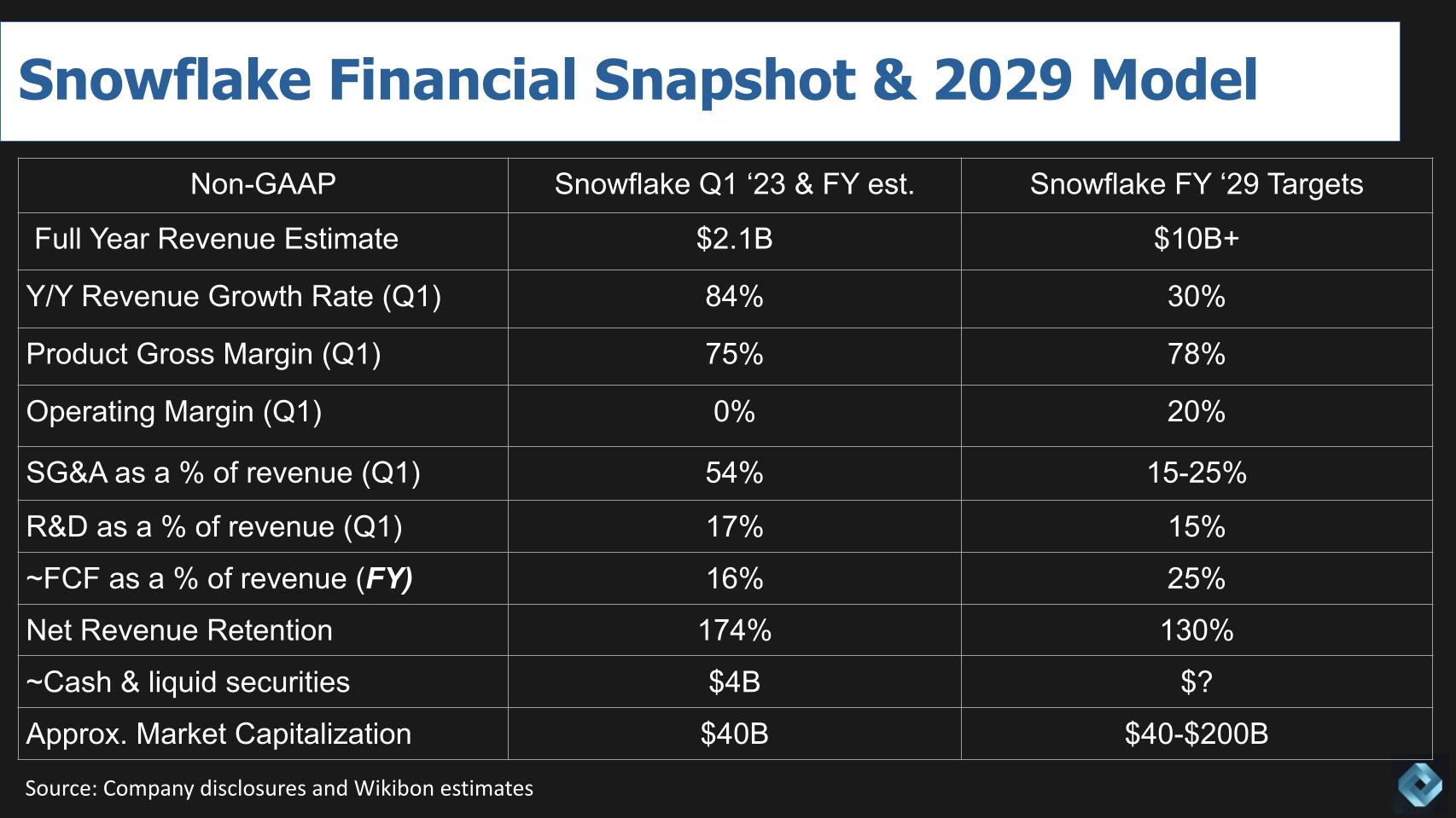

The chart above shows a financial snapshot of Snowflake’s current business, using a combination of quarterly and full-year numbers, and a model of what the business will look like in 2029, based on the company’s disclosures and our estimates.

Snowflake this year will surpass $2 billion in revenue and is targeting $10 billion-plus by 2029. Its current growth rate is 84%, targeting a robust 30% growth in the out years. Gross margins will tick up a bit, but remember, Snowflake’s cost of goods sold is dominated by its cloud costs – it has to pay Amazon Web Services, Microsoft Azure and Google Cloud for its infrastructure. But high 70s is a good target. Snowflake has a tiny operating margin today and is targeting 20% in 2029, so that would be $2 billion annually. You would certainly expect its operating leverage in the out years to enable much lower selling, general and administrative costs than the current 54%. R&D will stay healthy.

But the really interesting number to watch is free cash flow. Snowflake will deliver free cash flow at 16% of revenue this year, growing to 25% by 2029, so $2.5 billion in FCF in the out years, up from previous long-term forecasts. And expect the net revenue retention to moderate but still be well over 100%.
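As a quick check on the 2029 dollar figures quoted above, here is a minimal sketch that applies the stated margin targets to the $10 billion revenue target. The constant and function names are illustrative, and the inputs are simply the round numbers from the text, not a full financial model.

```python
# Round figures quoted in the text; names are illustrative only.
REVENUE_2029_B = 10.0    # "$10 billion-plus" revenue target, in $ billions
OPERATING_MARGIN = 0.20  # 2029 operating margin target
FCF_MARGIN = 0.25        # 2029 free cash flow as a share of revenue


def dollars_from_margin(revenue_b: float, margin: float) -> float:
    """Return the dollar amount (in $ billions) implied by a margin on revenue."""
    return revenue_b * margin


operating_income_b = dollars_from_margin(REVENUE_2029_B, OPERATING_MARGIN)
free_cash_flow_b = dollars_from_margin(REVENUE_2029_B, FCF_MARGIN)

print(f"Implied 2029 operating income: ${operating_income_b:.1f}B")
print(f"Implied 2029 free cash flow:   ${free_cash_flow_b:.1f}B")
```

Because the revenue target is "$10 billion-plus," these results are best read as floors rather than point estimates.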

Today Snowflake and every other stock are well off; this morning the company had a $40 billion value, dropping below that midday, but let’s stick with $40 billion. And who knows what the stock will be valued at in 2029. We have no idea, but let’s say between $40 billion and $200 billion. It could get even uglier in the market as interest rates rise and if inflation stays high, until we get a Paul Volcker-like action from the Fed chair. Let’s hope we don’t have a repeat of the 1970s.

Scenario analysis of Snowflake’s long-term returns

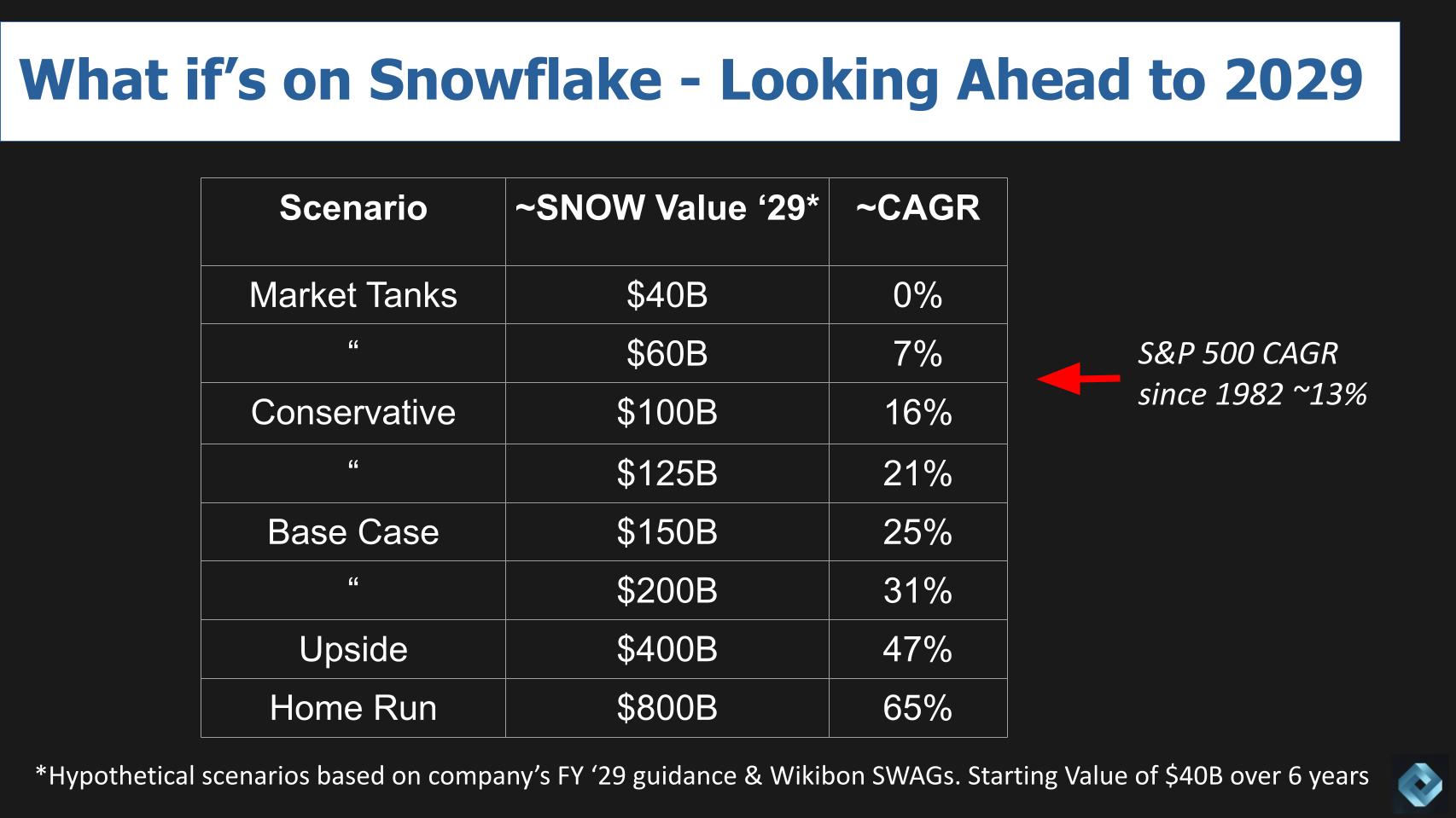

Let’s do a sensitivity analysis of Snowflake’s long-term returns based on Scarpelli’s 2029 projections to see if you think a long-term investment in the company is worth the inherent risk and volatility of the stock.

In the chart above, we’ve assumed a current valuation of about $40 billion and calculated the compound annual growth rate through 2029 for each of our valuation estimates at that time. We’ve also noted the S&P 500’s approximate CAGR since 1982.

Stock market tanks. If the market tanks and Snowflake’s value stays between $40 billion and $60 billion, investors get a 0% to 7% return, which would be a major disappointment if the company performs as projected.

Conservative case. Our conservative valuation case ranges from $100 billion to $125 billion. This would outperform the historical S&P returns but given the volatility and risks might not be so alluring to investors.

Base case. Our base case is a valuation between $150 billion and $200 billion and delivers a CAGR between 25% and 31%. Approximate software valuation comps in today’s market would be Adobe (about $15 billion in revenue growing at 15% with a $200 billion value); VMware ($12 billion in revenue growing at 3% with a $60 billion value); Salesforce ($20 billion in revenue growing at 20% with a $180 billion value); ServiceNow ($5 billion in revenue growing at 26% with a $100 billion value); and Intuit ($12 billion in revenue growing at 35% with a $107 billion value).

Upside and home run. As you get to a $400 billion valuation, which would exceed revenue growth, you get an average annual return of 47% and at our home run scenario of $800 billion, a 65% CAGR.
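The scenario returns above can be reproduced with the standard compound annual growth rate formula. The sketch below is a rough check, not the model behind the chart: the text does not state the holding period, so the six-year horizon is an assumption, chosen because it matches the quoted percentages. The function and variable names are illustrative.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


START_VALUATION_B = 40.0  # current valuation used in the text, in $ billions
YEARS = 6                 # ASSUMPTION: horizon inferred from the quoted returns

# Low and high 2029 valuations for each scenario, in $ billions (from the text).
scenarios = {
    "Stock market tanks": (40.0, 60.0),
    "Conservative case": (100.0, 125.0),
    "Base case": (150.0, 200.0),
    "Upside": (400.0, 400.0),
    "Home run": (800.0, 800.0),
}

for name, (low, high) in scenarios.items():
    low_return = cagr(START_VALUATION_B, low, YEARS)
    high_return = cagr(START_VALUATION_B, high, YEARS)
    print(f"{name}: {low_return:.0%} to {high_return:.0%}")
```

Changing `YEARS` shows how sensitive these returns are to the holding period: a longer horizon lowers every scenario’s annualized return.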

Could Snowflake beat the base-case projections? Absolutely. Could the market perform at the optimistic end of the spectrum? Certainly it could outperform the base-case levels. Could it not perform at those levels? You bet. But hopefully this gives a little context to Scarpelli’s framework. Notwithstanding the market’s unpredictability, Snowflake looks like it’s going to continue an amazing run compared with software companies historically.

Survey data confirms Scarpelli’s explanations

Let’s look at some ETR survey data and see the degree to which it aligns with what Snowflake is telling the Street:

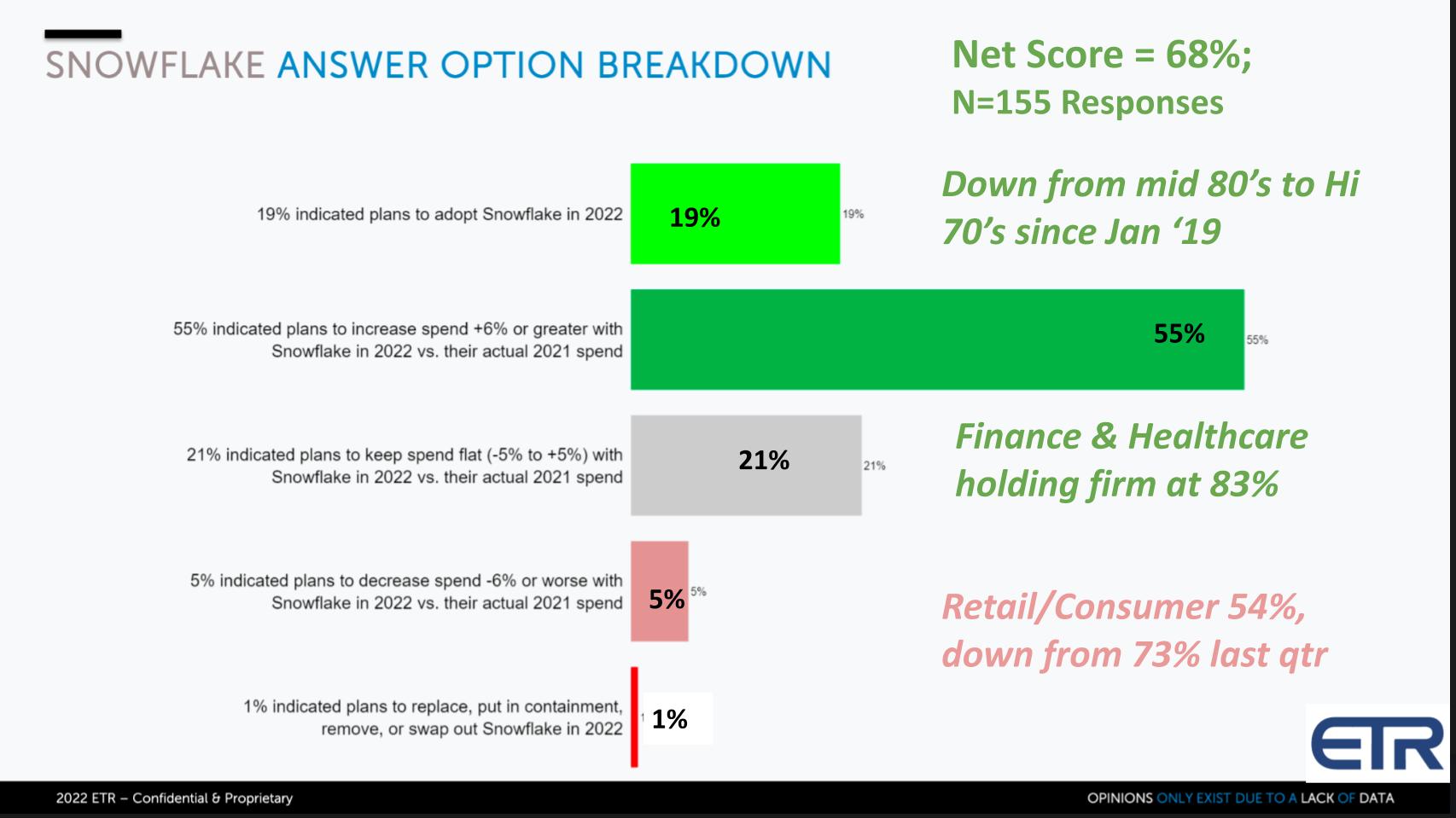

The chart above shows the breakdown of Snowflake’s Net Score. Net Score is ETR’s proprietary methodology that measures the percentage of customers in its survey in each spending category: those adding the platform new (the lime green at 19%); existing customers increasing spending on the platform by 6% or more (the forest green at 55%); flat spending (the gray at 21%); decreasing spending (the pinkish at 5%); and churning (the red at only 1%). Subtract the reds from the greens and you net out to 68%, an impressive Net Score by ETR standards, but down from the high 70s and mid-80s where Snowflake has been since 2019.
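To make the Net Score arithmetic concrete, here is a minimal sketch based only on the description above (greens minus reds). The class and field names are hypothetical, and this is our reading of the calculation rather than ETR’s official implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SpendBreakdown:
    """Share of surveyed customers in each spending category, in percent."""
    adoption: int   # adding the platform new (lime green)
    increase: int   # spending more on the platform (forest green)
    flat: int       # flat spending (gray); not part of the Net Score
    decrease: int   # decreasing spending (pinkish)
    churn: int      # leaving the platform (red)

    def net_score(self) -> int:
        """Greens minus reds, as described in the text."""
        return (self.adoption + self.increase) - (self.decrease + self.churn)


# Figures quoted in the text; they sum to 101 because of rounding.
snowflake = SpendBreakdown(adoption=19, increase=55, flat=21, decrease=5, churn=1)
print(f"Net Score: {snowflake.net_score()}%")
```

Flat spending is carried in the breakdown for completeness, but it drops out of the calculation, which is why a platform with a large gray segment can still post a high Net Score.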

Note that this survey of 1,500 or so organizations includes 155 Snowflake customers. What was really interesting is when we cut the data by industry sector. Two of Snowflake’s most important verticals are finance and healthcare. Both are holding a Net Score in the company’s historic range of 83%. But retail/consumer showed a dramatic decline this past survey, from 73% down to 54% in three months’ time.

ETR survey data shows that the sector showing the steepest decline in Net Score was retail/consumer – from 73% last quarter to 54% in the April survey.

So this data aligns almost perfectly with what Scarpelli has been telling the Street.

Visualizing Snowflake’s spending velocity and market penetration over time

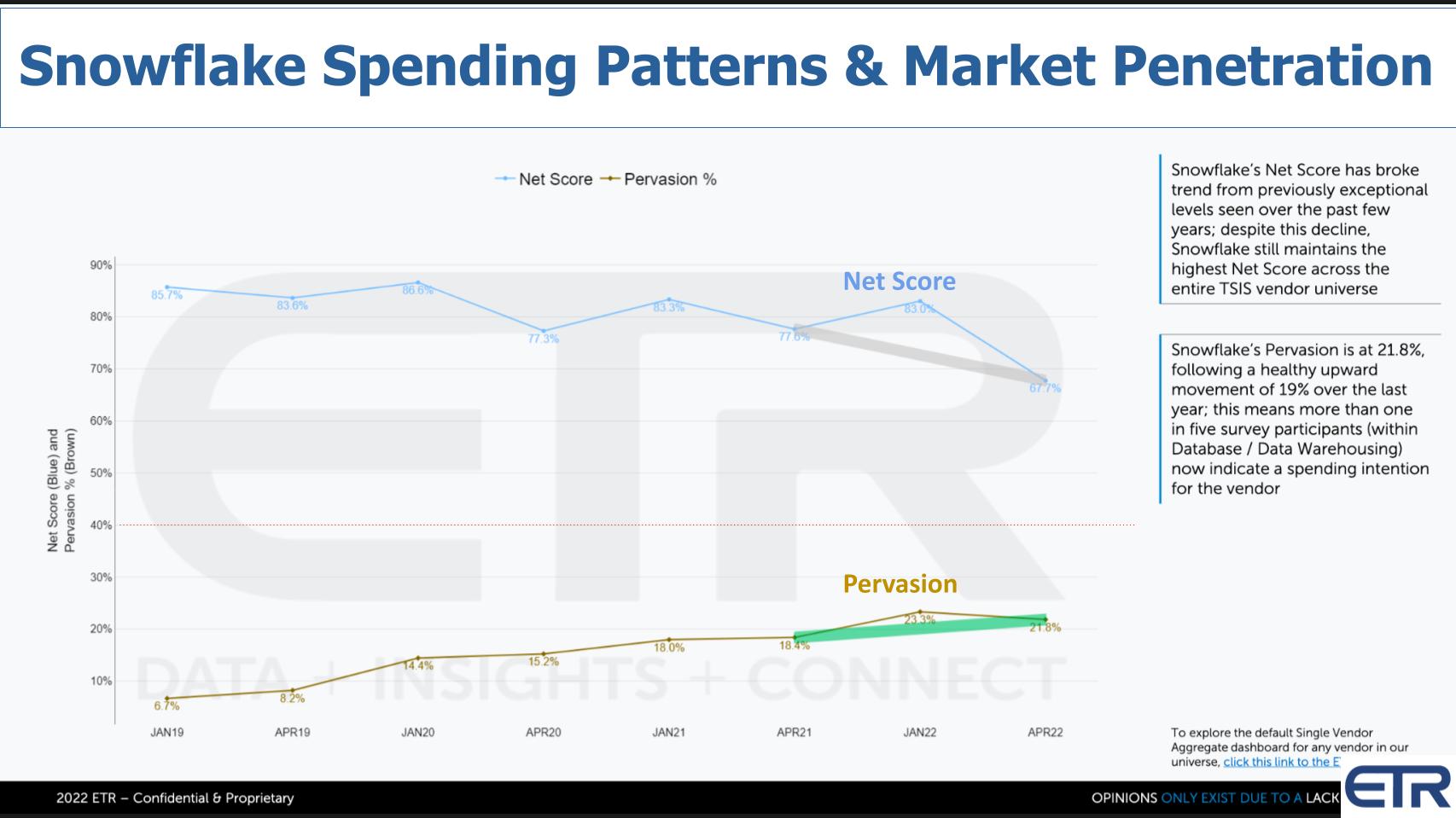

The chart above shows time series data for Net Score (spending momentum) and Pervasion, meaning how penetrated Snowflake is in the survey.

As a reminder, Net Score measures the net percent of customers spending more on a specific platform. Pervasion measures presence in the data set and is a proxy for market penetration. You can see the steep downward trend in Net Score this past quarter. Now for context, note the red line on the vertical axis at 40%. That is a kind of magic number. We interpret any company above that as best in class. Snowflake is still well above that line… but the April survey, as we reported on May 7 in detail, shows a meaningful break in the Snowflake trend.

On the bottom brownish line you can see a steady rise in Pervasion, which is a proxy for Snowflake’s overall market penetration… steady up and to the right.

Despite the momentum decline, Snowflake remains above other platforms

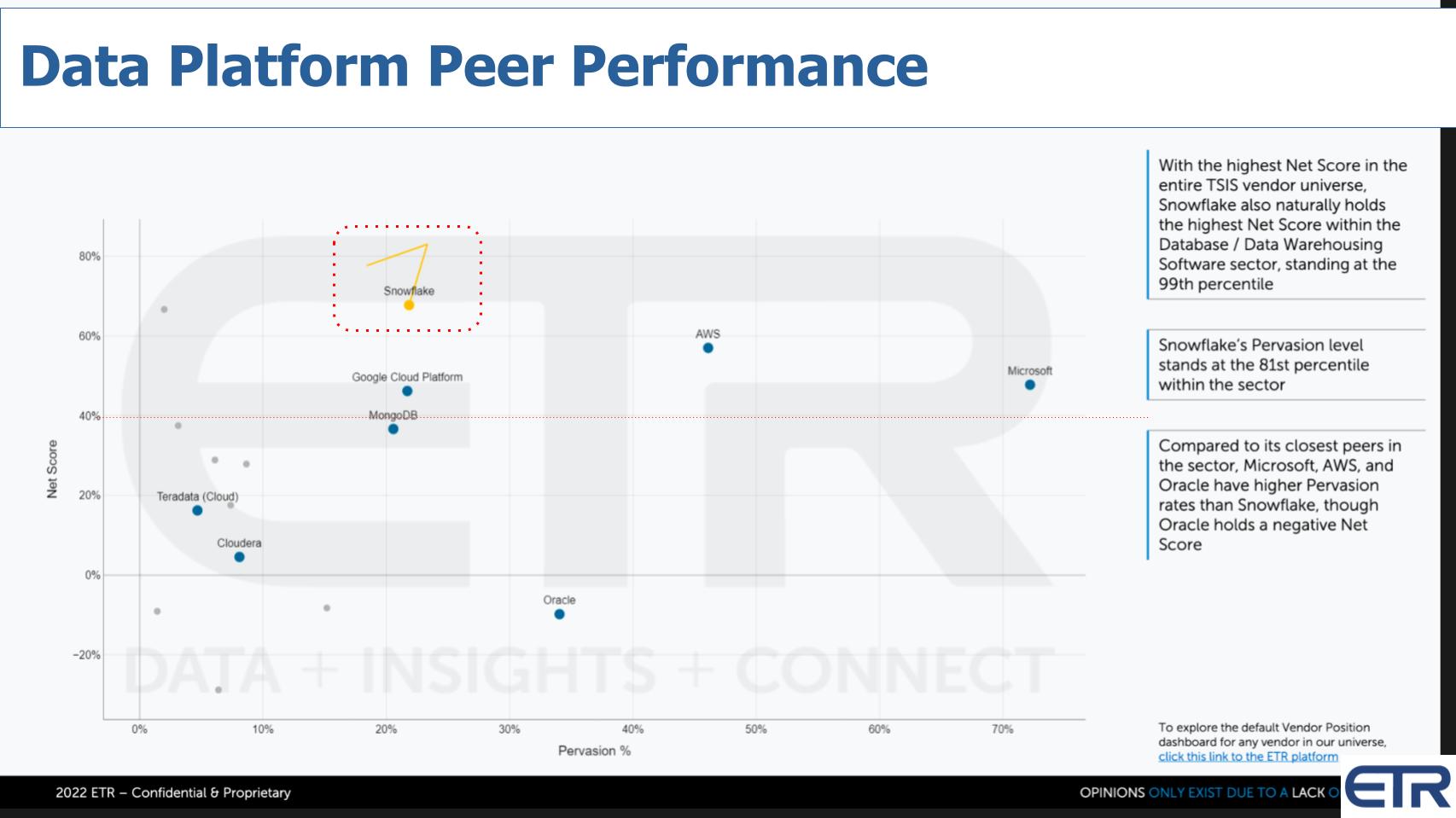

In the chart above we show a different view of the previous data set by comparing some of Snowflake’s peers and other data platforms:

This XY graph shows Net Score on the vertical axis and Pervasion on the horizontal with the red dotted line at 40%. You can see from the ETR call-outs on the right that Snowflake, while declining in Net Score, still holds the highest score in the survey and of course in the data platforms sector. Although Snowflake’s spending velocity outperforms that of AWS and Microsoft data platforms, those two are still well above the 40% line with a stronger market presence in the category than Snowflake. You see Google Cloud and MongoDB right around the 40% line.

Now we reported on Mongo last week, and discussed the commentary on consumption models. We referenced Raimo Lenschow’s research note that rewarded MongoDB for its forecasting transparency and less likelihood of facing consumption headwinds.

We’ll reiterate what we said last week: Snowflake, while seeing demand fluctuations this past quarter from those large customers, is not like a data lake where you just shove data in and figure it out later (i.e. no schema on write). That type of work is going to be more discretionary. When you bring data into Snowflake, you have specific intents of driving insights that lead to action and value creation.

Further, as Snowflake adds capabilities and expands its platform features, innovations and ecosystem, more and more data products will be developed in the Snowflake Data Cloud. By data products we mean products and services that are conceived by business users and that can be directly monetized — not just via analytics but through governed data sharing.

Snowflake’s Data Cloud is not just a better EDW – it’s a data ‘supercloud’

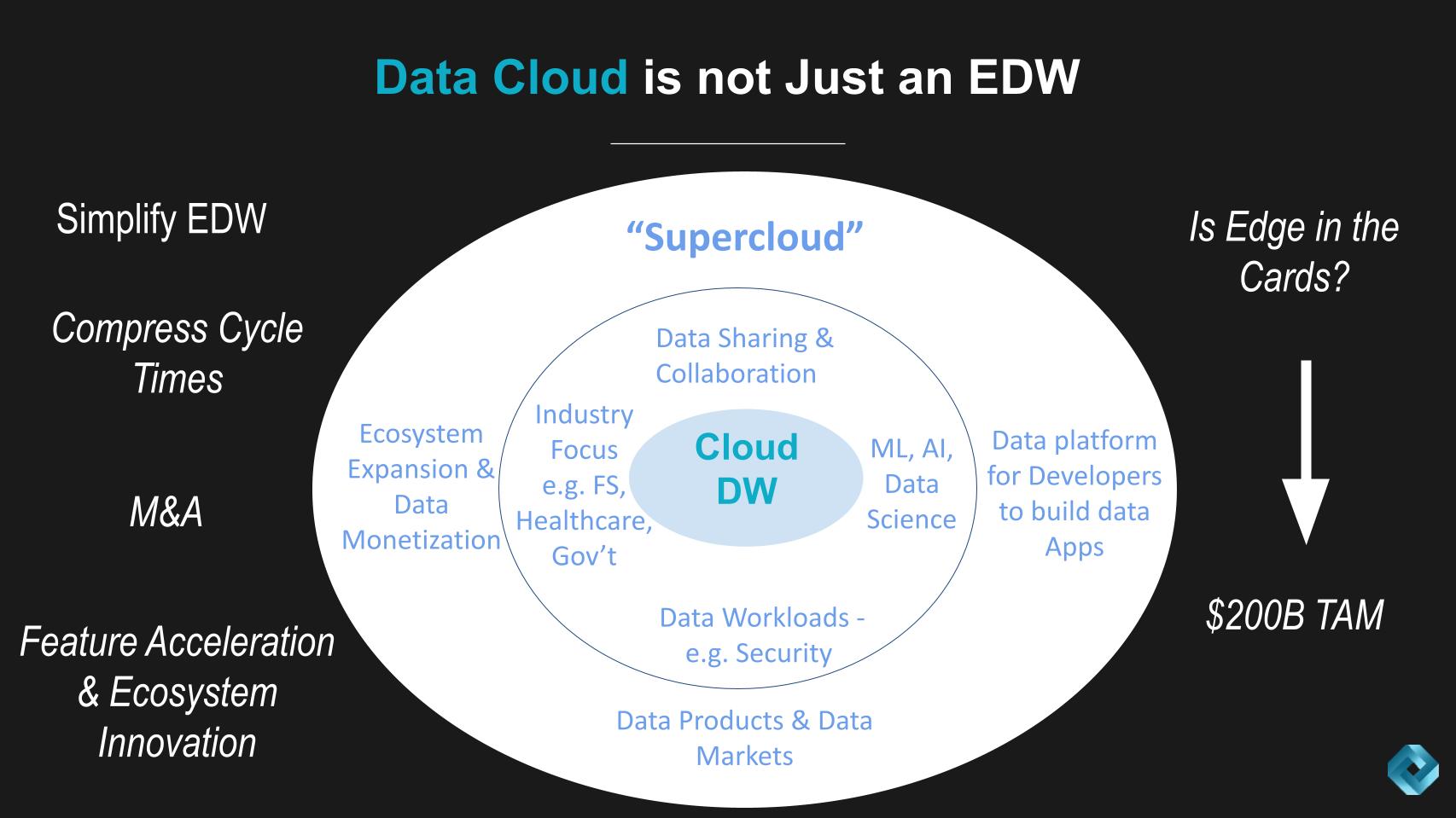

Above is a picture of Snowflake’s opportunity and Data Cloud vision as we see it:

The above graphic is a spin on our Snowflake total available market chart that we’ve published many times. The key point goes back to our opening statements. The Snowflake Data Cloud is evolving well beyond a simpler, cloud-based enterprise data warehouse. Snowflake is building what we often refer to as a supercloud. That is an abstraction layer that comprises rich features and leverages the underlying primitives and application programming interfaces of the cloud providers and adds new value beyond infrastructure.

That value is expressed on the left in terms of compressed cycle times. Snowflake uses the example of a pharmaceutical company compressing time to discover a drug by years. Great example. There are many others. Through organic development and ecosystem expansion, Snowflake will accelerate feature delivery. Snowflake’s Data Cloud vision is not about vertically integrating all functionality into its platform. Rather it’s about delivering secure, governed, facile and powerful analytics and data sharing capabilities to customers, partners and a broad ecosystem.

Ecosystem is how Snowflake fills gaps in its platform and accelerates feature delivery. By building the best cloud data platform in the world in terms of collaboration, security, governance, developer friendliness, machine intelligence, and the like, Snowflake plans to create a de facto standard in data platforms.

Get your data into the Snowflake Data Cloud and all the native capabilities will be available to you.

Is that a walled-garden, proprietary strategy? Well, that’s an interesting question. Openness is a moving target. It’s definitely proprietary in the sense that Snowflake is building something that is highly differentiable, exclusive to Snowflake and not open source. But, like the cloud, the more open capabilities Snowflake can add to its platform, the more language support, open APIs and the like, the more developer-friendly it becomes and the greater the likelihood people will gravitate toward Snowflake.

Zhamak Dehghani, creator of the data mesh concept, might bristle at that and favor a more open-source version of Snowflake. But practically speaking, she would recognize we’re a long way from that and, we think, see the benefits of a platform that, despite requiring data to be inside a data cloud, can distribute data globally, enable facile, governed and computational data sharing and, to a large degree, be a self-service platform for data product builders.

This is how we see the Snowflake Data Cloud vision evolving. Is edge part of that vision? We think that is going to be a future challenge where the ecosystem is going to have to come into play to fill gaps. If Snowflake can tap the edge, it will bring even more clarity as to how the company expands into what we believe is a massive $200 billion TAM as shown in the chart above.

What to watch at Snowflake Summit next week

Let’s close on next week’s Snowflake Summit in Las Vegas.

TheCUBE is excited to be there. Lisa Martin will be co-hosting and we’ll have Frank Slootman on as well as Christian Kleinerman, Benoit Dageville, Constellation’s Doug Henschen, Dave Menninger, Sanjeev Mohan and Tony Baer from dbInsight… along with several other Snowflake experts, analysts, customers and numerous ecosystem partners.

We spoke with Sanjeev Mohan prior to recording this Breaking Analysis to riff on what we should watch for at Snowflake Summit this coming week. Below is a summary of what we’ll be looking for.

- Evolution of the Data Cloud. Evidence that our view of the Snowflake Data Cloud is taking shape and evolving in the way we showed on the previous chart;

- Streamlit integration. Where is Snowflake at with its Streamlit acquisition? Streamlit is a data science play and an expansion into Databricks Inc.’s territory with open-source Python libraries and machine learning. Increasingly, we see Snowflake responding to market friction that it’s a closed system and we expect a number of developer-friendly initiatives from the company;

- Developers. We expect to hear some discussion, hopefully a lot, about developers. Snowflake has a dedicated developer conference in November, so we expect to hear more about that.

- Leveraging Snowpark. How is Snowflake advancing Snowpark? It has announced a public preview of programming for unstructured data and data monetization along the lines of what we suggested earlier – coming to the Snowflake Data Cloud to develop products that can be monetized and have all the bells and whistles of native Snowflake, and eliminating the heavy lifting of data sharing, governance and rich analytics capabilities.

- New workloads. Snowflake has already announced a new workload this past week in security and we will be watching for others.

- Ecosystem. Finally, what’s happening in the ecosystem? One of the things we noted covering ServiceNow in its post-IPO early adult years was the slow pace of ecosystem development. ServiceNow had some niche system integrators and eventually the big guys came in… and you had some other innovators circling the mothership… but generally we see Slootman emphasizing ecosystem growth much more at Snowflake than with his previous company. And that is a fundamental requirement of any modern cloud company in our view.

To paraphrase the crazy, sweaty man on stage, Steve Ballmer: “Developers developers developers… Yes!” Well… developers want optionality and they get that from ecosystem, ecosystem, ecosystem!

And that’s how we see the current and future state of Snowflake.

Keep in touch

Thanks to Stephanie Chan, who researches topics for this Breaking Analysis. Alex Myerson is on production, the podcasts and media workflows. Special thanks to Kristen Martin and Cheryl Knight, who help us keep our community informed and get the word out, and to Rob Hof, our editor in chief at SiliconANGLE. And special thanks this week to Andrew Frick, Steven Conti, Anderson Hill, Sara Kinney and the entire Palo Alto team.

Remember we publish each week on Wikibon and SiliconANGLE. These episodes are all available as podcasts wherever you listen.

Email [email protected], DM @dvellante on Twitter and comment on our LinkedIn posts.

Also, check out this ETR Tutorial we created, which explains the spending methodology in more detail. Note: ETR is a separate company from Wikibon and SiliconANGLE. If you would like to cite or republish any of the company’s data, or inquire about its services, please contact ETR at [email protected].


All statements made regarding companies or securities are strictly beliefs, points of view and opinions held by SiliconANGLE media, Enterprise Technology Research, other guests on theCUBE and guest writers. Such statements are not recommendations by these individuals to buy, sell or hold any security. The content presented does not constitute investment advice and should not be used as the basis for any investment decision. You and only you are responsible for your investment decisions.

(Disclosure: Many of the companies cited in Breaking Analysis are sponsors of theCUBE and/or clients of Wikibon. None of these firms or other companies have any editorial control over or advanced viewing of what’s published in Breaking Analysis.)