Synthetic Media: How deepfakes could soon change our world – 60 Minutes

You may never have heard the term “synthetic media,” more commonly known as “deepfakes,” but our military, law enforcement and intelligence agencies certainly have. They are hyper-realistic video and audio recordings that use artificial intelligence and “deep” learning to create “fake” content or “deepfakes.” The U.S. government has grown increasingly concerned about their potential to be used to spread disinformation and commit crimes. That’s because the creators of deepfakes have the power to make people say or do anything, at least on our screens. As we first reported in October, most Americans have no idea how far the technology has come in just the last five years or the danger, disruption and opportunities that come with it.

Deepfake Tom Cruise: You know I do all my own stunts, obviously. I also do my own music.

Chris Ume/Metaphysic

This is not Tom Cruise. It’s one of a series of hyper-realistic deepfakes of the movie star that began appearing on the video-sharing app TikTok in February 2021.

Deepfake Tom Cruise: Hey, what’s up TikTok?

For days people wondered if they were real, and if not, who had created them.

Deepfake Tom Cruise: It’s critical.

Finally, a modest, 32-year-old Belgian visual effects artist named Chris Umé stepped forward to claim credit.

Chris Umé: We believed as long as we’re making clear this is a parody, we’re not doing anything to harm his image. But after a few videos, we realized like, this is blowing up, we’re getting millions and millions and millions of views.

Umé says his work is made easier because he teamed up with a Tom Cruise impersonator whose voice, gestures and hair are nearly identical to the real McCoy. Umé only deepfakes Cruise’s face and stitches that onto the real video and sound of the impersonator.

Deepfake Tom Cruise: That’s where the magic happens.

For technophiles, DeepTomCruise was a tipping point for deepfakes.

Deepfake Tom Cruise: Still got it.

Bill Whitaker: How do you make this so seamless?

Chris Umé: It begins with training a deepfake model, of course. I have all the face angles of Tom Cruise, all the expressions, all the emotions. It takes time to create a really good deepfake model.

Bill Whitaker: What do you mean “training the model?” How do you train your computer?

Chris Umé: “Training” means it’s going to analyze all the images of Tom Cruise, all his expressions, compared to my impersonator. So the computer’s gonna teach itself: When my impersonator is smiling, I’m gonna recreate Tom Cruise smiling, and that’s, that’s how you “train” it.

Chris Ume/Metaphysic

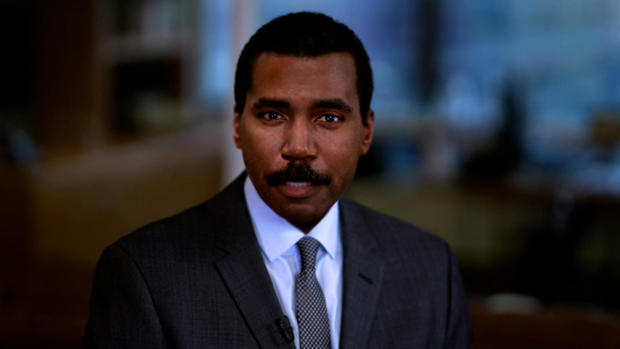

Using video from the CBS News archives, Chris Umé was able to train his computer to learn every aspect of my face, and wipe away the decades. This is how I looked 30 years ago. He can even remove my mustache. The possibilities are endless and a little frightening.

Chris Umé: I see a lot of mistakes in my work. But I don’t mind it, actually, because I don’t want to fool people. I just want to show them what’s possible.

Bill Whitaker: You don’t want to fool people.

Chris Umé: No. I want to entertain people, I want to raise awareness, and I want to show where it’s all going.

Nina Schick: It is without a doubt one of the most important revolutions in the future of human communication and perception. I would say it’s analogous to the birth of the internet.

Political scientist and technology consultant Nina Schick wrote one of the first books on deepfakes. She first came across them five years ago when she was advising European politicians on Russia’s use of disinformation and social media to interfere in democratic elections.

Bill Whitaker: What was your reaction when you first realized this was possible and was going on?

Nina Schick: Well, given that I was coming at it from the perspective of disinformation and manipulation in the context of elections, the fact that AI can now be used to make images and video that are fake, that look hyper-realistic. I thought, well, from a disinformation perspective, this is a game-changer.

So far, there’s no evidence deepfakes have “changed the game” in a U.S. election, but in March 2021, the FBI put out a notification warning that “Russian [and] Chinese… actors are using synthetic profile images,” creating deepfake journalists and media personalities to spread anti-American propaganda on social media.

The U.S. military, law enforcement and intelligence agencies have kept a wary eye on deepfakes for years. At a 2019 hearing, Senator Ben Sasse of Nebraska asked if the U.S. is prepared for the onslaught of disinformation, fakery and fraud.

Ben Sasse: When you think about the catastrophic potential to public trust and to markets that could come from deepfake attacks, are we organized in a way that we could possibly respond fast enough?

Dan Coats: We clearly need to be more agile. It poses a major threat to the United States and something that the intelligence community needs to be restructured to address.

Since then, technology has continued moving at an exponential pace while U.S. policy has not. Efforts by the government and big tech to detect synthetic media are competing with a community of “deepfake artists” who share their latest creations and techniques online.

Like the internet, the first place deepfake technology took off was in pornography. The sad fact is the majority of deepfakes today consist of women’s faces, mostly celebrities, superimposed onto pornographic videos.

Nina Schick: The first use case in pornography is just a harbinger of how deepfakes can be used maliciously in many different contexts, which are now starting to arise.

Bill Whitaker: And they’re getting better all the time?

Nina Schick: Yes. The incredible thing about deepfakes and synthetic media is the pace of acceleration when it comes to the technology. And by five to seven years, we are basically looking at a trajectory where any single creator, so a YouTuber, a TikToker, will be able to create the same level of visual effects that is only accessible to the most well-resourced Hollywood studio today.

The technology behind deepfakes is artificial intelligence, which mimics the way humans learn. In 2014, researchers for the first time used computers to create realistic-looking faces using something called “generative adversarial networks,” or GANs.

Nina Schick: So you set up an adversarial game where you have two AIs combating each other to try and create the best fake synthetic content. And as these two networks combat each other, one trying to generate the best image, the other trying to detect where it could be better, you basically end up with an output that is increasingly improving all the time.
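For readers who want to see the adversarial game in miniature, here is a toy sketch in Python. It is only an illustration under heavy assumptions, not the method used by Schick, Umé or any company in this report: real GANs pit two deep neural networks against each other over images, while this version uses single numbers, a one-line "generator" and a one-line "discriminator." Every name in it (`Generator`, `Discriminator`, `train`, `real_mean`) is hypothetical and chosen for the example.

```python
import math
import random


def sigmoid(t: float) -> float:
    """Numerically stable logistic function, mapping any number into (0, 1)."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


class Discriminator:
    """Scores a number: near 1 means 'looks real', near 0 means 'looks fake'."""

    def __init__(self) -> None:
        self.w = 0.0
        self.c = 0.0

    def score(self, x: float) -> float:
        return sigmoid(self.w * x + self.c)

    def step(self, real, fake, lr: float) -> None:
        # Gradient ascent on mean log D(real) + mean log(1 - D(fake)):
        # push scores for real samples up and scores for fakes down.
        gw = gc = 0.0
        for x in real:
            err = 1.0 - self.score(x)
            gw += err * x / len(real)
            gc += err / len(real)
        for x in fake:
            err = self.score(x)
            gw -= err * x / len(fake)
            gc -= err / len(fake)
        self.w += lr * gw
        self.c += lr * gc


class Generator:
    """Turns random noise z into a fake sample: x = scale * z + shift."""

    def __init__(self) -> None:
        self.scale = 1.0
        self.shift = 0.0

    def sample(self, z: float) -> float:
        return self.scale * z + self.shift

    def step(self, noise, disc: Discriminator, lr: float) -> None:
        # Gradient ascent on mean log D(G(z)): change the fakes in whatever
        # direction makes the discriminator rate them as more "real".
        g_scale = g_shift = 0.0
        for z in noise:
            err = 1.0 - disc.score(self.sample(z))
            g_shift += err * disc.w / len(noise)
            g_scale += err * disc.w * z / len(noise)
        self.shift += lr * g_shift
        self.scale += lr * g_scale


def train(steps: int = 200, batch: int = 32, lr: float = 0.05,
          real_mean: float = 4.0, seed: int = 0):
    """Alternate one discriminator update and one generator update per step."""
    rng = random.Random(seed)
    gen, disc = Generator(), Discriminator()
    for _ in range(steps):
        real = [rng.gauss(real_mean, 0.5) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [gen.sample(z) for z in noise]
        disc.step(real, fake, lr)
        gen.step(noise, disc, lr)
    return gen, disc


if __name__ == "__main__":
    gen, disc = train()
    print("generator shift after training:", gen.shift)
```

The "real" data here are just numbers clustered around `real_mean`. The discriminator learns to tell them apart from the generator's output, and the generator then shifts its output toward whatever the discriminator currently rates as real. A model this small can circle around the target rather than settle on it, which is one reason practical systems rely on far larger networks and additional training techniques.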

Schick says the power of generative adversarial networks is on full display at a website called “ThisPersonDoesNotExist.com”.

Nina Schick: Every time you refresh the page, there’s a new image of a person who does not exist.

Each is a one-of-a-kind, entirely AI-generated image of a human being who never has, and never will, walk this Earth.

Nina Schick: You can see every pore on their face. You can see every hair on their head. But now imagine that technology being expanded out not only to human faces, in still images, but also to video, to audio synthesis of people’s voices and that’s really where we’re heading right now.

Bill Whitaker: This is mind-blowing.

Nina Schick: Indeed. [Laughs]

Bill Whitaker: What is the positive aspect of this?

Nina Schick: The technology itself is neutral. So just as bad actors are, without a doubt, going to be using deepfakes, it is also going to be used by good actors. So first of all, I would say that there’s a very compelling case to be made for the commercial use of deepfakes.

Victor Riparbelli is CEO and co-founder of Synthesia, based in London, one of dozens of companies using deepfake technology to transform video and audio productions.

Victor Riparbelli: The way Synthesia works is that we’ve essentially replaced cameras with code, and once you’re working with software, we do a lotta things that you wouldn’t be able to do with a normal camera. We’re still very early. But this is gonna be a fundamental change in how we create media.

Synthesia makes and sells “digital avatars,” using the faces of paid actors to deliver personalized messages in 64 languages… and allows corporate CEOs to address employees overseas.

Snoop Dogg: Did someone say, Just Eat?

Synthesia has also helped entertainers like Snoop Dogg go forth and multiply. This elaborate TV commercial for European food delivery service Just Eat cost a fortune.

Snoop Dogg: J-U-S-T-E-A-T-…

Victor Riparbelli: Just Eat has a subsidiary in Australia, which is called Menulog. So what we did with our technology was we switched out the word Just Eat for Menulog.

Snoop Dogg: M-E-N-U-L-O-G… Did somebody say, “MenuLog?”

Victor Riparbelli: And all of a sudden they had a localized version for the Australian market without Snoop Dogg having to do anything.

Bill Whitaker: So he makes twice the money, huh?

Victor Riparbelli: Yeah.

All it took was eight minutes of me reading a script on camera for Synthesia to create my synthetic talking head, complete with my gestures, head and mouth movements. Another company, Descript, used AI to create a synthetic version of my voice, with my cadence, tenor and syncopation.

Deepfake Bill Whitaker: This is the result. The words you’re hearing were never spoken by the real Bill into a microphone or to a camera. He merely typed the words into a computer and they come out of my mouth.

It may look and sound a little rough around the edges right now, but as the technology improves, the possibilities of spinning words and images out of thin air are endless.

Deepfake Bill Whitaker: I’m Bill Whitaker. I’m Bill Whitaker. I’m Bill Whitaker.

Bill Whitaker: Wow. And the head, the eyebrows, the mouth, the way it moves.

Victor Riparbelli: It’s all synthetic.

Bill Whitaker: I could be lounging at the beach. And say, “Folks, you know, I’m not gonna come in today. But you can use my avatar to do the work.”

Victor Riparbelli: Maybe in a few years.

Bill Whitaker: Don’t tell me that. I’d be tempted.

Tom Graham: I think it will have a big impact.

The rapid advances in synthetic media have caused a virtual gold rush. Tom Graham, a London-based lawyer who made his fortune in cryptocurrency, recently started a company called Metaphysic with none other than Chris Umé, creator of DeepTomCruise. Their goal: develop software to allow anyone to create Hollywood-caliber movies without lights, cameras, or even actors.

Tom Graham: As the hardware scales and as the models become more efficient, we can scale up the size of that model to be an entire Tom Cruise body, movement and everything.

Bill Whitaker: Well, talk about disruptive. I mean, are you gonna put actors out of jobs?

Tom Graham: I think it’s a great thing if you’re a well-known actor today because you may be able to let somebody collect data for you to create a version of yourself in the future where you could be acting in movies after you have deceased. Or you could be the director, directing your younger self in a movie or something like that.

If you are wondering how all of this is legal, most deepfakes are considered protected free speech. Attempts at legislation are all over the map. In New York, commercial use of a performer’s synthetic likeness without consent is banned for 40 years after their death. California and Texas prohibit deceptive political deepfakes in the lead-up to an election.

Nina Schick: There are so many ethical, philosophical gray zones here that we really need to think about.

Bill Whitaker: So how do we as a society grapple with this?

Nina Schick: Just understanding what’s going on. Because a lot of people still don’t know what a deepfake is, what synthetic media is, that this is now possible. The counter to that is, how do we inoculate ourselves and understand that this kind of content is coming and exists without being completely cynical? Right? How do we do it without losing trust in all authentic media?

That’s going to require all of us to figure out how to maneuver in a world where seeing is not always believing.

Produced by Graham Messick and Jack Weingart. Broadcast associate, Emilio Almonte. Edited by Richard Buddenhagen.